How to Maintain Clean Core APIs for Research

Building a library for research and experiments is quite different from building other types of software. A key challenge is that, in research, abstractions and APIs are rarely set in stone: users may want to propose a slight variant or modification to literally ANYWHERE in the whole program, just because they have a new idea.

In deep learning libraries, these variants can be a different implementation of a layer, a change in optimization algorithm, or a small modification to the training logic, etc.

Designing and maintaining these "research APIs" is difficult due to how frequently users want to change their behaviors. Such changes are often implemented by simply adding features to the target API they want to modify, e.g. by adding a new flag to the API, or by adding a new abstraction that generalizes the target API towards the users’ use case.

However, when maintaining a generic core library meant to be adopted by diverse use cases over the long term, this approach does not scale and poses many problems (discussed below).

This note lists a few principles for working with "research APIs" that should help answer:

- How to maintain a clean set of core APIs in research libraries.

- How library maintainers & users can collaborate to meet users’ diverse needs without complicating the core APIs.

Core does not aim to implement all use cases

A researcher's job is to do things in new ways, so their needs are too diverse for a core library to include or implement features for every possible use case. The library should include only the most popular and standardized features (more on the criteria later).

Core should allow most features to be implemented out-of-core

For features not included in the core, there should be a way for users to implement them in a non-intrusive way, often out-of-core as extensions, without too much overhead / repetition.

This requires continuous design evolution to make the core more modular and composable, so that core code can be reused in users’ new implementations.

A good sanity check for library maintainers is to ask the following question:

For any feature currently in the core library, suppose we remove it today, how much effort would it take for users to reimplement it out-of-core?

A well-designed library should be decoupled such that most of its features are just extensions of itself, and they can be implemented out-of-core the same way as they are in the core.

Criteria for feature inclusion

There are 3 criteria for feature inclusion in core, ordered by their importance.

- Popularity: Whether the feature is used by many users

- Standardization: Whether the feature’s API is standardized/agreed among its users

- Simplicity: Whether the feature is simple to implement

To understand the criteria better, let’s ask what happens if a feature is:

Popular but not standardized: sometimes a feature is popular, but its users don’t yet agree on the proper parameterization, its API, or the subtle implementation details. Including such features is risky, as it may create unclear semantics or impede standardization in the future. It’s still OK to include such a feature if it’s very popular (popularity is the most important criterion), but try to do it in a composable way and with warning signs.

As a negative example, "Transformer" is a popular but not standardized feature. It is included in PyTorch, but it received many complaints, and many projects (e.g. fairseq, DETR) eventually had to fork and reimplement their own Transformer.

Simple but not popular/standardized: Simplicity alone is not sufficient for inclusion, no matter how simple the feature is. If everyone adds the simple feature they need, the result collectively becomes complex.

Popular, standardized but not simple: Simplicity is only the third most important factor. If something is complex but very popular & standardized (e.g. BatchNorm, which is a headache for DL library developers), it should be included. In fact, this is where a library can provide a lot of value to users.

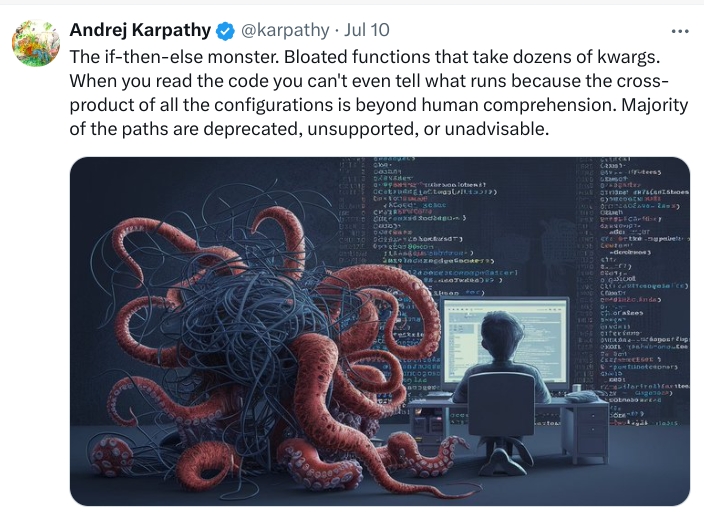

Concern of New Arguments

When a user wants to change the behavior of a "research API" def func() defined in core, adding new arguments is often the quickest way to get things done. But it may introduce a number of maintenance problems.

Simple Flags / Options

| New flag | New argument |
|---|---|
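As a hypothetical illustration of the two patterns named in the table above, here is a minimal sketch. The function bodies, the `normalize` flag, and the `reduction` argument are assumptions invented for this example; they do not come from any real library.

```python
def func_with_flag(xs: list[float], normalize: bool = False) -> float:
    """Hypothetical core function extended with a new boolean flag."""
    total = sum(xs)
    if normalize:  # new branch introduced by the flag
        total /= len(xs)
    return total


def func_with_option(xs: list[float], reduction: str = "sum") -> float:
    """Hypothetical core function extended with a new valued argument."""
    if reduction == "sum":
        return sum(xs)
    if reduction == "mean":
        return sum(xs) / len(xs)
    raise ValueError(f"unknown reduction: {reduction!r}")
```

Both forms keep the default behavior unchanged, which is why they look harmless when considered one at a time.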

Adding a simple argument to control the behavior like above is OK, if we think that the new option is very clear and popular. But as a "research API", many users will want to add their own customizations. This could lead to the following problems:

- Poor code health: The library may gradually accumulate too many features that are:

  - Hard to read due to branching (as there are too many flags). Ideally, readers should not pay extra mental overhead for logic they don’t care about

  - Hard to maintain because the contextual knowledge about them is distributed among different developers

- Confusing behaviors: More and more features added over time may not interact with each other in a clear way, causing confusing or silently wrong behaviors

  - E.g. featureA becomes a no-op when featureB is enabled

  - E.g. featureA and featureB are conflicting / overlapping in semantics

  - E.g. featureA’s semantics become undefined when featureB is enabled

- "More general" may become "less general": A common argument for adding options like this is that it doesn't change existing behavior and "makes the function more general".

  However, keep in mind that when a function becomes more general in one aspect, it's often less general in other aspects. Generalizing in one direction may not be a net win, because research code has too many possible directions to generalize in, and picking one direction may limit the ability to pick others in the future. We will show what this means shortly.
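To make the "featureA becomes a no-op when featureB is enabled" case concrete, here is a small sketch. The `prepare` function and its `shuffle`, `seed`, and `sort` flags are hypothetical, chosen only to show how independently added options can silently cancel each other.

```python
import random


def prepare(items, shuffle=False, seed=None, sort=False):
    """Hypothetical helper that has accumulated flags from different users."""
    out = list(items)
    if shuffle:
        # Added for one use case: randomize the order.
        random.Random(seed).shuffle(out)
    if sort:
        # Added later for another use case. Sorting discards whatever order
        # shuffling produced, so shuffle/seed silently become no-ops here.
        out.sort()
    return out
```

Nothing in the signature warns the caller that `shuffle=True, sort=True` is a meaningless combination; the interaction is only visible by reading the body.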

Callbacks

To add a new behavior to func, one can also add a callback argument:

| Inject custom behaviors through callbacks: | Use object.method as callbacks: |
|---|---|
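A minimal sketch of the two callback styles named in the table above might look as follows. The body of `func`, the `post_process` parameter, and the `Clipper` class are assumptions made up for illustration.

```python
from typing import Callable


def func(x: float, post_process: Callable[[float], float]) -> float:
    """Hypothetical core function that delegates one step to a callback."""
    y = x * 2  # logic that stays in core
    return post_process(y)  # custom behavior injected by the caller


class Clipper:
    """User-side object whose bound method serves as the callback."""

    def __init__(self, limit: float) -> None:
        self.limit = limit

    def clip(self, y: float) -> float:
        return min(y, self.limit)


# Style 1: pass a plain function (here a lambda) as the callback.
result_fn = func(3.0, lambda y: y + 1)

# Style 2: pass a bound method, so the callback carries its own state.
result_method = func(3.0, Clipper(5.0).clip)
```

In both styles the custom logic lives in user code, while core only fixes where the callback is invoked and what it receives.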

This appears useful, since the custom logic is not implemented in core, but in a user-provided callback.

For example, given the original code below (left), a researcher who wants to compute y differently may propose a compute_y_fn argument like below (right).

| Original: | With callbacks: |
|---|---|
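The following is a hedged reconstruction of what such a pair could look like, based only on the names used in the surrounding text (a, x, y, compute_y_fn). The helper functions `compute_x`, `compute_y`, `compute_z` and their bodies are placeholders invented for this sketch.

```python
from typing import Callable


# Placeholder steps; their bodies are invented for this sketch.
def compute_x(a: float) -> float:
    return a + 1


def compute_y(x: float) -> float:
    return x * 2


def compute_z(x: float, y: float) -> float:
    return x + y


def func(a: float) -> float:
    """Original: every step is fixed inside the function."""
    x = compute_x(a)
    y = compute_y(x)
    return compute_z(x, y)


def func_with_callback(
    a: float, compute_y_fn: Callable[[float], float] = compute_y
) -> float:
    """With callbacks: the caller supplies how y is computed."""
    x = compute_x(a)
    # Implicit contract: the callback runs here, sees only x, returns only y.
    y = compute_y_fn(x)
    return compute_z(x, y)
```

The comment on the callback line spells out the assumptions that the new argument quietly bakes into the API.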

However, this design often turns out to be more problematic:

- Premature abstractions: Assumptions/constraints are implicitly created about where the callback is triggered, what arguments it needs and what it returns. These assumptions may be bad.

  In the example above, a 2nd researcher may want to compute y using both x and a; a 3rd researcher may want to compute y, z in one function compute_y_z_fn because it's more efficient. These variants conflict with the 1st researcher's design.

  In the future, after seeing enough use cases, we might realize that xyz = compute_xyz(a) is a truly good abstraction. However, at that time the premature abstraction of compute_y_fn will get in our way of implementing compute_xyz. In other words, although the current design makes the computation of y "more general", the abstraction limits our ability to generalize the function in other ways. That's why we said earlier that "more general" may become "less general".

- Obscure logic: readers can't easily figure out what this function does by reading its code: they need to look at the caller of this function to see which callback is supplied, and then look at the implementation of the callback function. The aforementioned issue of "confusing behaviors" also applies here.
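The conflict with the 2nd researcher's variant can be sketched as below. The arithmetic inside `func` and the name `y_from_x_and_a` are hypothetical; only the shape of the callback contract matters.

```python
def func(a, compute_y_fn):
    """Hypothetical core function with the 1st researcher's callback."""
    x = a + 1
    y = compute_y_fn(x)  # contract: only x goes in, only y comes out
    return x + y


def y_from_x_and_a(x, a):
    """2nd researcher's variant: y depends on both x and a."""
    return x * a


def fits_contract(fn) -> bool:
    """Report whether fn can be used directly as compute_y_fn."""
    try:
        func(3, fn)
        return True
    except TypeError:
        # Raised because func() calls the callback with a single argument.
        return False


# The variant cannot be plugged in directly...
direct_ok = fits_contract(y_from_x_and_a)
# ...so the caller must smuggle `a` in through a closure instead.
workaround = func(3, lambda x: y_from_x_and_a(x, 3))
```

The closure works, but it forces the caller to pass `a` twice and keep both copies consistent, which is exactly the kind of friction a premature abstraction creates.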

Sometimes callbacks are good and useful abstractions. But because they are so powerful, I have frequently seen them abused to alter a behavior into something that's strongly overfitted to a small number of use cases. This happens especially often in research code. In code reviews, I usually frown upon APIs that require callbacks/user-defined functions.

Prefer forks over new arguments

To customize a "research API" def func() defined in core, we have the following options:

1. Out-of-core, e.g. a def func_v2() in user code. (Or a class ClassV2 for classes).

2. In-core, but keep existing APIs unaffected, e.g. a def func_v2() in core.

3. In-core, and change existing APIs, e.g. a new option in def func(option).

The best choice is heavily subjective and should be evaluated case-by-case.

Due to the concerns about new arguments described above, in general we recommend methods (1) and (2), i.e. prefer forking func() over changing func().

- If a fork will create significant code duplication, choose (2) and try to reduce duplication with private abstractions (see next section).

- Adding flags / simple args is acceptable for simple, clean, popular additions.

- Adding callbacks / new abstraction requires scrutiny, and should come with more than a handful of use cases in mind.

This also echoes the Flax design philosophy, which says "prefer duplication over adding options / bad abstractions".

Accept duplication, but aim to reduce it later

Users/developers may find that the core design is not good enough yet, and recreating a variant of func() without touching it may lead to too much code duplication.

For example, ... is duplicated between the two functions below.

| Existing API in core | New variant |
|---|---|
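As a hypothetical illustration of this kind of duplication, consider the sketch below. The concrete steps (dropping missing values, scaling by the peak) are invented stand-ins for the shared logic; only the last line of each function differs.

```python
def func(xs):
    """Existing API in core."""
    cleaned = [x for x in xs if x is not None]  # duplicated step
    peak = max(cleaned)                         # duplicated step
    scaled = [x / peak for x in cleaned]        # duplicated step
    return sum(scaled)


def func_v2(xs):
    """New variant: same preparation, different final reduction."""
    cleaned = [x for x in xs if x is not None]  # duplicated step
    peak = max(cleaned)                         # duplicated step
    scaled = [x / peak for x in cleaned]        # duplicated step
    return max(scaled) - min(scaled)
```

The fork leaves func() completely untouched, at the price of three repeated lines.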

Such duplication is acceptable in the short term. We do NOT mean to encourage users to heavily fork core code. Instead, users and core developers should engage with each other and aim to evolve the core design to reduce duplication. But design changes take time, and duplication is preferred until a good design is found.

How to reduce duplication

The lowest-risk way to reduce duplication is to move the duplicated code into shared, reusable code:

| Existing API in core | New variant |
|---|---|

This should be the preferred way to reduce duplications. The benefits are:

- No change to the API of func(), hence little risk.

- Create reusable sub-routines that may be useful to new use cases.

However, there are also challenges:

- This adds a new API (_reusable_parts()) to maintain.

- Sometimes it's difficult to identify a clean & reusable subset that can be easily split from the duplicated code. It may require small refactoring to expose a clean subset. Also, remember that the approach that reduces the most duplications might not be the one with the best abstraction.

The above challenges are less significant if _reusable_parts() is private. Therefore:

- If func_v2() is in core, make _reusable_parts() private.

- If func_v2() must be out-of-core, consider _reusable_parts() as "internal/experimental APIs".

Inheritance, e.g. class ModuleV2(ModuleCore) may also reduce duplication between two variants. However, this is generally less preferable than composition like above. The reason is similar to why callbacks are not preferred: overriding methods is like passing callbacks - they are both user-defined functions and suffer from the same limitations: users are constrained by the assumption of when/where/how the methods/callbacks are triggered.
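The contrast can be sketched as follows. The class names ModuleCore and ModuleV2 come from the text; the `forward`/`transform` methods, the `_add_one` helper, and `ModuleV2Composed` are assumptions made up for illustration.

```python
class ModuleCore:
    """Hypothetical core module with an overridable step."""

    def forward(self, x):
        h = self.transform(x)  # core decides when and how this is called
        return h + 1

    def transform(self, x):
        return x * 2


class ModuleV2(ModuleCore):
    """Inheritance: the override behaves like a callback passed to forward().

    It can change what transform() returns, but not when forward() calls it
    or which arguments it receives.
    """

    def transform(self, x):
        return x * 3


def _add_one(h):
    """Shared helper extracted for reuse by composition."""
    return h + 1


class ModuleV2Composed:
    """Composition: the variant owns its control flow and reuses helpers."""

    def forward(self, x):
        return _add_one(x * 3)
```

Both variants produce the same result here, but only the composed one is free to reorder steps or pass extra arguments without changing ModuleCore.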

Prefer branching at shallower code path

As discussed above, we prefer "adding a new implementation" over "adding new conditional branches to the existing implementation", but sometimes branching has to happen somewhere anyway, in order to choose between the two implementations.

If branching has to happen, we prefer it at an earlier, shallower point in the code path:

| Branch earlier | Branch later |
|---|---|
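A hypothetical sketch of the two placements is shown below. The function bodies and the `use_v2` switch are invented for this example.

```python
def func(x):
    """Existing implementation, unaware of any variant."""
    return x + 1


def func_v2(x):
    """New variant, kept as a separate function."""
    return x * 2


def run_branch_earlier(x, use_v2=False):
    """Branch earlier: the caller picks an implementation up front."""
    impl = func_v2 if use_v2 else func
    return impl(x)


def func_branch_later(x, use_v2=False):
    """Branch later: the choice is buried inside the function body."""
    if use_v2:
        return x * 2
    return x + 1
```

Both placements compute the same values; the difference is that with early branching a reader of func() never encounters the variant at all.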

By branching earlier, we keep a clean func() unaffected by the new variant.

This recommendation is consistent with the preference to fork func_v2() rather than add a flag to func().